Understanding a complex algorithm such as backpropagation can be confusing. You have probably browsed many pages only to find lots of confusing math formulas. Unfortunately, that is how engineers and scientists designed these neural networks. However, there is always a way to port each formula to program source code.

Porting the Backpropagation Neural Network to C++

In this short article, I am going to show you how to port the backpropagation network to C++ source code. Please note that I am going to post only the basics here. You will have to do the rest.

First part: Network Propagation

The neural network propagation function is given by

$$net = f\left(\sum_{i=1}^{n} w_i x_i + \theta w_\theta\right)$$

where net is the output value of each neuron of the network, $x_i$ and $w_i$ are the inputs and weights of the neuron, $\theta$ is the gain with its weight $w_\theta$, and f(x) is the activation function. For this implementation, I'll be using the sigmoid function $f(x) = 1/(1+e^{-x})$ as the activation function. Please note that the training algorithm I am showing in this article is designed for this activation function.
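As a small illustration of the activation function, here is a minimal sketch of the sigmoid and of its derivative expressed through its own output. The function names are hypothetical and not part of the article's source; the derivative form output * (1 - output) is the term that shows up later in the training code.

```cpp
#include <cassert>
#include <cmath>

// Logistic sigmoid: f(x) = 1 / (1 + e^(-x)); the result lies in (0, 1).
inline float sigmoid(float x)
{
    return 1.0f / (1.0f + std::exp(-x));
}

// Derivative of the sigmoid written in terms of its own output:
// f'(x) = f(x) * (1 - f(x)).
inline float sigmoid_derivative_from_output(float output)
{
    assert(output >= 0.0f && output <= 1.0f); // must be a sigmoid output
    return output * (1.0f - output);
}
```

For example, sigmoid(0) is 0.5, and the derivative at that point is 0.5 * (1 - 0.5) = 0.25.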

Feed forward networks are composed of neurons and layers. So, to make this port to source code easier, let's use the power of C++ classes and structures to represent each portion of the neural network.

Neural Network Data Structures

A feed forward network, like many neural networks, is made up of layers. In this case the backpropagation network is a multi-layer network, so we must find a way to implement each layer as a separate unit, as well as each neuron. Let’s begin with the simplest structures and work up to the complex ones.

Neuron Structure

The neuron structure should contain everything that a neuron represents:

- An array of floating point numbers as the “synaptic connector” or weights

- The output value of the neuron

- The gain value of the neuron; this is usually 1

- The weight or synaptic connector of the gain value

- Additionally, an array of floating point values to contain the delta values, that is, the last delta value update from the previous iteration. Please note that these values are used only during training. See the delta rule for more details on /backpropagation.html.

struct neuron

{

float *weights; // neuron input weights or synaptic connections

float *deltavalues; //neuron delta values

float output; //output value

float gain;//Gain value

float wgain;//Weight gain value

neuron();//Constructor

~neuron();//Destructor

void create(int inputcount);//Allocates memory and initializes values

};
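The struct above only declares create. As a rough sketch of what such a function might do, the hypothetical simple_neuron below uses std::vector instead of raw pointers, so it needs no manual destructor. The random initialization range of [-1, 1] and the helper random_weight are assumptions made for illustration; the article's source may initialize the values differently.

```cpp
#include <cassert>
#include <cstdlib>
#include <vector>

// Hypothetical stand-in for the article's "neuron" struct, using std::vector
// so that no manual destructor is needed.
struct simple_neuron
{
    std::vector<float> weights;     // one weight per input
    std::vector<float> deltavalues; // last weight updates, used for momentum
    float output = 0.0f;
    float gain = 1.0f;              // usually 1
    float wgain = 0.0f;             // weight applied to the gain

    void create(int inputcount)
    {
        assert(inputcount > 0);
        weights.resize(inputcount);
        deltavalues.assign(inputcount, 0.0f); // no previous update yet
        for (float &w : weights)
            w = random_weight();
        wgain = random_weight();
    }

    // Assumed initialization range: uniform in [-1, 1].
    static float random_weight()
    {
        return 2.0f * (static_cast<float>(std::rand()) / RAND_MAX) - 1.0f;
    }
};
```

Starting the delta values at zero means the momentum term contributes nothing on the first training step.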

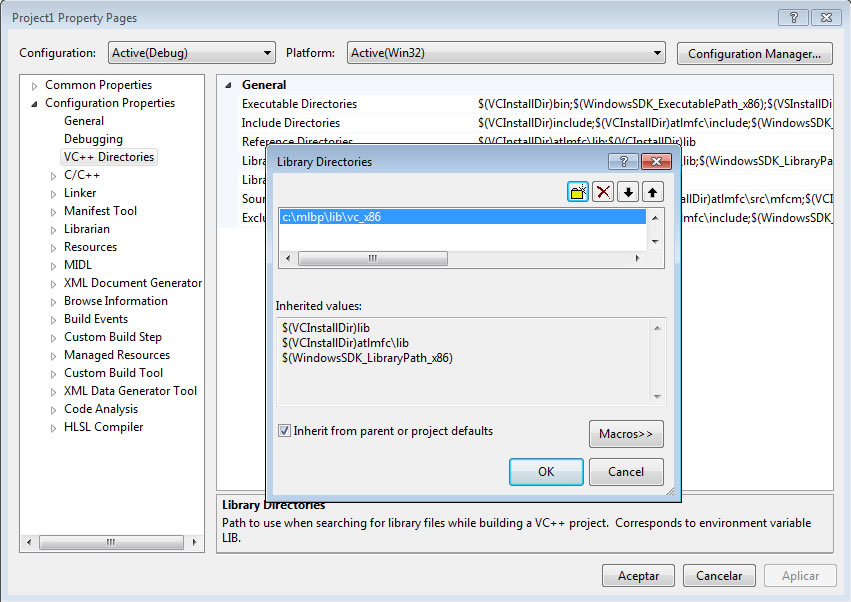

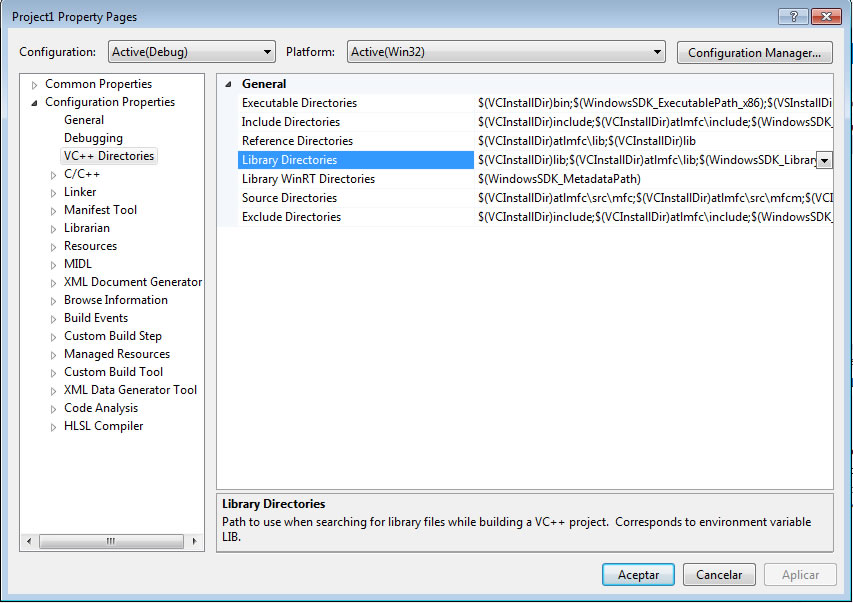

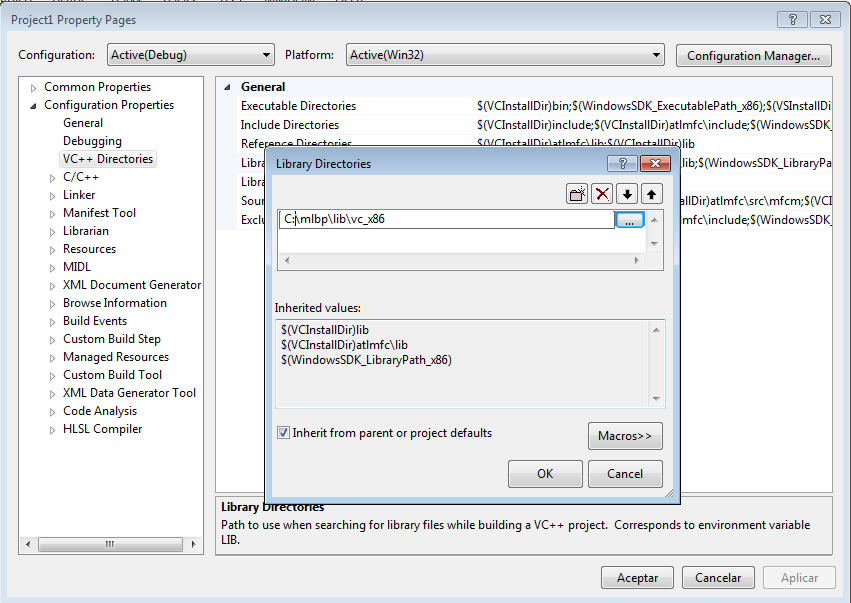

Layer Structure

Our next structure is the “layer”. Basically, it contains an array of neurons along with the layer input. All neurons from the layer share the same input, so the layer input is represented by an array of floating point values.

struct layer

{

neuron **neurons;//The array of neurons

int neuroncount;//The total count of neurons

float *layerinput;//The layer input

int inputcount;//The total count of elements in layerinput

layer();//Object constructor. Initializes all values to 0

~layer();//Destructor. Frees the memory used by the layer

void create(int inputsize, int _neuroncount);//Creates the layer and allocates memory

void calculate();//Calculates all neurons performing the network formula

};

The “layer” structure contains a block of neurons representing a layer of the network. It contains the pointer to the array of “neuron” structures, the array containing the layer input, and their respective count descriptors. Moreover, it includes the constructor, destructor and creation functions.

The Neural Network Structure

class bpnet

{

private:

layer m_inputlayer;//input layer of the network

layer m_outputlayer;//output layer..contains the result of applying the network

layer **m_hiddenlayers;//Additional hidden layers

int m_hiddenlayercount;//the count of additional hidden layers

public:

//function to create the network structure in memory

bpnet();//Constructor..initializes all values to 0

~bpnet();//Destructor..releases memory

//Creates the network structure on memory

void create(int inputcount,int inputneurons,int outputcount,int *hiddenlayers,int hiddenlayercount);

void propagate(const float *input);//Calculates the network values given an input pattern

//Updates the weight values of the network given a desired output and applying the backpropagation

//Algorithm

float train(const float *desiredoutput,const float *input,float alpha, float momentum);

//Updates the next layer input values

void update(int layerindex);

//Returns the output layer..this is useful to get the output values of the network

inline layer &getOutput()

{

return m_outputlayer;

}

};

The “bpnet” class represents the entire neural network. It contains its basic input layer, output layer and optional hidden layers.
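To make the relationship between the layers concrete, the sketch below computes the shape of every layer from the same arguments that bpnet::create receives. It assumes that hiddenlayers holds the neuron count of each hidden layer and that every layer takes the outputs of the previous layer as its inputs; layer_shapes is a hypothetical helper, not part of the bpnet class.

```cpp
#include <cassert>
#include <utility>
#include <vector>

// Each pair is (inputs per neuron, neuron count) for one layer, listed from
// the input layer to the output layer. Hypothetical helper, not part of bpnet.
std::vector<std::pair<int, int>> layer_shapes(int inputcount, int inputneurons,
                                              int outputcount,
                                              const int *hiddenlayers,
                                              int hiddenlayercount)
{
    assert(hiddenlayercount == 0 || hiddenlayers != nullptr);
    std::vector<std::pair<int, int>> shapes;
    shapes.push_back({inputcount, inputneurons}); // input layer
    int previous = inputneurons; // outputs of the layer just added
    for (int i = 0; i < hiddenlayercount; i++)
    {
        shapes.push_back({previous, hiddenlayers[i]});
        previous = hiddenlayers[i];
    }
    shapes.push_back({previous, outputcount}); // output layer
    return shapes;
}
```

With 2 inputs, 3 input neurons, 1 output and no hidden layers, this yields an input layer of 3 neurons with 2 inputs each, followed by an output layer of 1 neuron with 3 inputs.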

Picturing the network structure isn’t that difficult. The trick comes when implementing the training algorithm. Let’s focus on the primary function bpnet::propagate(const float *input) and the member function layer::calculate(). These functions propagate the input and calculate the neural network output values. Function propagate is the one you should use in your final application.

Calculating the network values

Calculating a layer using the $net = f\left(\sum_{i=1}^{n} w_i x_i + \theta w_\theta\right)$ function

Our first goal is to calculate the neurons of each layer, and there is no better way than implementing a member function in the layer object to do this job. Function layer::calculate() shows how to implement this formula applied to the layer.

void layer::calculate()

{

int i,j;

float sum;

//Apply the formula for each neuron

for(i=0;i<neuroncount;i++)

{

sum=0;//store the sum of all values here

for(j=0;j<inputcount;j++)

{

//Performing function

sum+=neurons[i]->weights[j] * layerinput[j]; //apply input * weight

}

sum+=neurons[i]->wgain * neurons[i]->gain; //apply the gain or theta multiplied by the gain weight.

//sigmoidal activation function

neurons[i]->output= 1.f/(1.f + exp(-sum));//calculate the sigmoid function

}

}
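To check the arithmetic by hand, the calculation for a single neuron can be isolated into a free function. This is only a sketch with a hypothetical name (neuron_output) and std::vector arguments in place of the article's raw arrays.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Output of one neuron: sigmoid(sum(weights[j] * inputs[j]) + wgain * gain).
float neuron_output(const std::vector<float> &weights,
                    const std::vector<float> &inputs,
                    float gain, float wgain)
{
    assert(weights.size() == inputs.size());
    float sum = 0.0f;
    for (std::size_t j = 0; j < weights.size(); j++)
        sum += weights[j] * inputs[j]; // apply input * weight
    sum += wgain * gain;               // add the weighted gain
    return 1.0f / (1.0f + std::exp(-sum));
}
```

With weights {0.5, -0.5}, inputs {1, 1}, gain 1 and wgain 0, the weighted sum is 0, so the neuron outputs 0.5.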

Calculating and propagating the network values

The propagate function calculates the network output for a given input. It starts by calculating the input layer, then propagates the result to the next layer and calculates that layer, and so on until it reaches the output layer. This is the function you would use in your application. Once the network has been propagated and calculated, you only need to read the output values.

void bpnet::propagate(const float *input)

{

//The propagation function should start from the input layer

//first copy the input vector to the input layer. Always make sure that

//"array input" has the same size as inputcount

memcpy(m_inputlayer.layerinput,input,m_inputlayer.inputcount * sizeof(float));

//now calculate the inputlayer

m_inputlayer.calculate();

update(-1);//propagate the inputlayer out values to the next layer

if(m_hiddenlayers)

{

//Calculating hidden layers if any

for(int i=0;i<m_hiddenlayercount;i++)

{

m_hiddenlayers[i]->calculate();

update(i);

}

}

//calculating the final stage: the output layer

m_outputlayer.calculate();

}
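The update function is declared in the class but not listed here. Judging from how propagate calls it, it presumably copies the outputs of one layer into the input array of the next one (with -1 standing for the input layer). The sketch below shows that single step under this assumption; feed_next_layer is a hypothetical name and uses std::vector rather than the article's structures.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical sketch of the step that update() is assumed to perform:
// the outputs of one layer become the input vector of the next layer.
void feed_next_layer(const std::vector<float> &outputs,
                     std::vector<float> &nextlayerinput)
{
    // The next layer must expect exactly one input per neuron of this layer.
    assert(outputs.size() == nextlayerinput.size());
    for (std::size_t i = 0; i < outputs.size(); i++)
        nextlayerinput[i] = outputs[i];
}
```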

Training the network

Finally, training is what makes the neural network useful. A neural network without training does not really do anything. The training function is what applies the backpropagation algorithm. I'll do my best to help you understand how this is ported to a program.

The training process consists of the following:

- First, calculate the network with function propagate

- We need a desired output for the given pattern so we must include this data

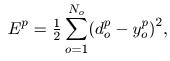

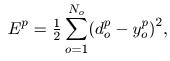

- Calculate the quadratic error and the layer error for the output layer. The quadratic error is determined by $E = \frac{1}{2}\sum_{i}(d_i - o_i)^2$, where $d_i$ and $o_i$ are the desired and current output respectively

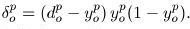

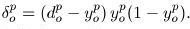

- Calculate the error value of the current layer by $lerror = (d - o) \cdot o \cdot (1 - o)$.

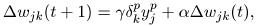

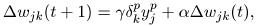

- Update weight values for each neuron applying the delta rule $\Delta w(t)_i = \alpha \cdot lerror \cdot x_i + \mu \cdot \Delta w(t-1)_i$, where $\alpha$ is the learning rate constant, $lerror$ the layer error and $x_i$ the layer input value. $\mu$ is the learning momentum and $\Delta w(t-1)_i$ is the previous delta value.

The next weight value would be $w(t+1)_i = w(t)_i + \Delta w(t)_i$

- The same rule applies for the hidden and input layers. However, the layer error is calculated in a different way: $lerror = o \cdot (1 - o) \cdot \sum_{i=1}^{n} lerror_l \cdot w_l$, where $lerror_l$ and $w_l$ are the error and weight values from the previously processed layer, and $o$ is the output of the neuron currently processed
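Before reading the full training function, it may help to see the output-layer steps above as three small functions. This is a sketch with hypothetical names, mirroring the expressions the training code uses for the quadratic error, the output layer error and the delta rule with momentum.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Quadratic error: E = 1/2 * sum((d_i - o_i)^2).
float quadratic_error(const std::vector<float> &desired,
                      const std::vector<float> &output)
{
    assert(desired.size() == output.size());
    float sum = 0.0f;
    for (std::size_t i = 0; i < desired.size(); i++)
        sum += (desired[i] - output[i]) * (desired[i] - output[i]);
    return sum / 2.0f;
}

// Output layer error for one neuron: (d - o) * o * (1 - o).
float output_layer_error(float desired, float output)
{
    return (desired - output) * output * (1.0f - output);
}

// Delta rule with momentum; updates the weight and returns the new delta,
// which the caller stores as the "previous delta" for the next iteration.
float apply_delta_rule(float &weight, float previousdelta, float alpha,
                       float momentum, float lerror, float input)
{
    float delta = alpha * lerror * input + momentum * previousdelta;
    weight += delta;
    return delta;
}
```

As a worked example, with a desired output of 1 and a current output of 0.5, the output layer error is 0.5 * 0.5 * 0.5 = 0.125. With alpha = 0.2, lerror = 0.5, input = 1, momentum = 0.1 and a previous delta of 0.2, the new delta is 0.2 * 0.5 * 1 + 0.1 * 0.2 = 0.12.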

//Main training function. Run this function in a loop as many times needed per pattern

float bpnet::train(const float *desiredoutput, const float *input, float alpha, float momentum)

{

//function train, teaches the network to recognize a pattern given a desired output

float errorg=0; //general quadratic error

float errorc; //local error;

float sum=0,csum=0;

float delta,udelta;

float output;

//first we begin by propagating the input

propagate(input);

int i,j,k;

//the backpropagation algorithm starts from the output layer propagating the error from the output

//layer to the input layer

for(i=0;i<m_outputlayer.neuroncount;i++)

{

//calculate the error value for the output layer

output=m_outputlayer.neurons[i]->output; //copy this value to facilitate calculations

//from the algorithm we can take the error value as

errorc=(desiredoutput[i] - output) * output * (1 - output);

//and the general error as the sum of delta values. Where delta is the squared difference

//of the desired value with the output value

//quadratic error

errorg+=(desiredoutput[i] - output) * (desiredoutput[i] - output) ;

//now we proceed to update the weights of the neuron

for(j=0;j<m_outputlayer.inputcount;j++)

{

//get the current delta value

delta=m_outputlayer.neurons[i]->deltavalues[j];

//update the delta value

udelta=alpha * errorc * m_outputlayer.layerinput[j] + delta * momentum;

//update the weight values

m_outputlayer.neurons[i]->weights[j]+=udelta;

m_outputlayer.neurons[i]->deltavalues[j]=udelta;

//we need this to propagate to the next layer

sum+=m_outputlayer.neurons[i]->weights[j] * errorc;

}

//calculate the weight gain

m_outputlayer.neurons[i]->wgain+= alpha * errorc * m_outputlayer.neurons[i]->gain;

}

for(i=(m_hiddenlayercount - 1);i>=0;i--)

{

for(j=0;j<m_hiddenlayers[i]->neuroncount;j++)

{

output=m_hiddenlayers[i]->neurons[j]->output;

//calculate the error for this layer

errorc= output * (1-output) * sum;

//update neuron weights

for(k=0;k<m_hiddenlayers[i]->inputcount;k++)

{

delta=m_hiddenlayers[i]->neurons[j]->deltavalues[k];

udelta= alpha * errorc * m_hiddenlayers[i]->layerinput[k] + delta * momentum;

m_hiddenlayers[i]->neurons[j]->weights[k]+=udelta;

m_hiddenlayers[i]->neurons[j]->deltavalues[k]=udelta;

csum+=m_hiddenlayers[i]->neurons[j]->weights[k] * errorc;//needed for next layer

}

m_hiddenlayers[i]->neurons[j]->wgain+=alpha * errorc * m_hiddenlayers[i]->neurons[j]->gain;

}

sum=csum;

csum=0;

}

//and finally process the input layer

for(i=0;i<m_inputlayer.neuroncount;i++)

{

output=m_inputlayer.neurons[i]->output;

errorc=output * (1 - output) * sum;

for(j=0;j<m_inputlayer.inputcount;j++)

{

delta=m_inputlayer.neurons[i]->deltavalues[j];

udelta=alpha * errorc * m_inputlayer.layerinput[j] + delta * momentum;

//update weights

m_inputlayer.neurons[i]->weights[j]+=udelta;

m_inputlayer.neurons[i]->deltavalues[j]=udelta;

}

//and update the gain weight

m_inputlayer.neurons[i]->wgain+=alpha * errorc * m_inputlayer.neurons[i]->gain;

}

//return the general error divided by 2

return errorg / 2;

}
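The function above carries a single accumulated sum from one layer to the next. The hidden layer formula is also commonly applied per neuron, so that neuron j only receives the errors weighted by the connections that leave neuron j. The sketch below shows that per-neuron variant; it is an alternative under assumed names (hidden_layer_errors, nextweights), not the code from the article's source.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Error of each neuron in a hidden layer, computed per neuron.
// outputs[j]        : output of hidden neuron j
// nexterrors[k]     : error of neuron k in the layer processed just before
// nextweights[k][j] : weight that neuron k applies to the output of neuron j
std::vector<float> hidden_layer_errors(
    const std::vector<float> &outputs,
    const std::vector<float> &nexterrors,
    const std::vector<std::vector<float>> &nextweights)
{
    assert(nexterrors.size() == nextweights.size());
    std::vector<float> errors(outputs.size(), 0.0f);
    for (std::size_t j = 0; j < outputs.size(); j++)
    {
        float sum = 0.0f; // weighted error reaching neuron j only
        for (std::size_t k = 0; k < nexterrors.size(); k++)
        {
            assert(nextweights[k].size() == outputs.size());
            sum += nexterrors[k] * nextweights[k][j];
        }
        errors[j] = outputs[j] * (1.0f - outputs[j]) * sum;
    }
    return errors;
}
```

For two hidden neurons with output 0.5 each, one following neuron with error 0.1 and weights {1, -2}, the hidden errors are 0.25 * 0.1 * 1 = 0.025 and 0.25 * 0.1 * (-2) = -0.05.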

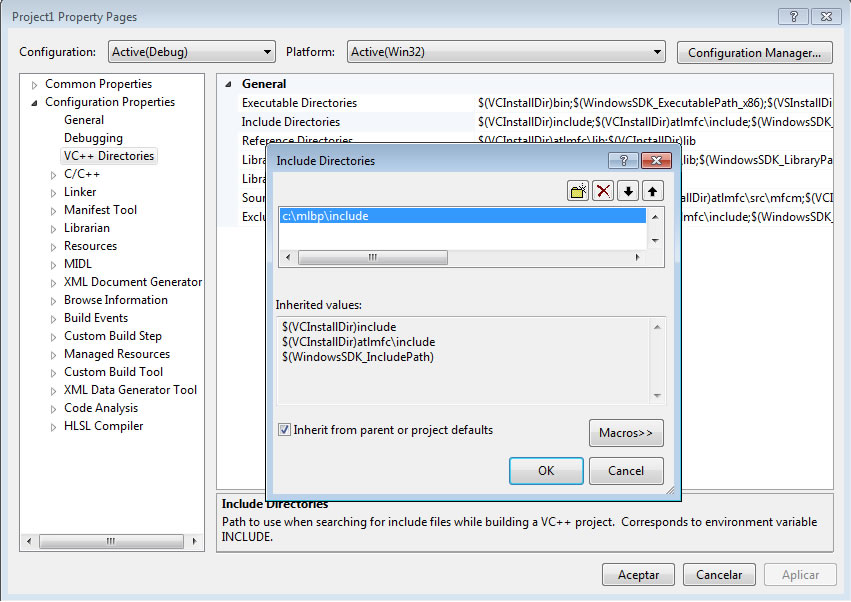

Sample Application

The complete source code can be found at the end of this article. I also included a sample application that shows how to use the class "bpnet" in an application. The sample shows how to teach the neural network the XOR (exclusive or) gate.

Creating an application with the class is not complicated.

#include <iostream>

#include "bpnet.h"

using namespace std;

#define PATTERN_COUNT 4

#define PATTERN_SIZE 2

#define NETWORK_INPUTNEURONS 3

#define NETWORK_OUTPUT 1

#define HIDDEN_LAYERS 0

#define EPOCHS 20000

int main()

{

//Create some patterns

//playing with xor

//XOR input values

float pattern[PATTERN_COUNT][PATTERN_SIZE]=

{

{0,0},

{0,1},

{1,0},

{1,1}

};

//XOR desired output values

float desiredout[PATTERN_COUNT][NETWORK_OUTPUT]=

{

{0},

{1},

{1},

{0}

};

bpnet net;//Our neural network object

int i,j;

float error;

//We create the network. There are no hidden layers, so the array of hidden layer sizes is null

net.create(PATTERN_SIZE,NETWORK_INPUTNEURONS,NETWORK_OUTPUT,nullptr,HIDDEN_LAYERS);

//Start the neural network training

for(i=0;i<EPOCHS;i++)

{

error=0;

for(j=0;j<PATTERN_COUNT;j++)

{

error+=net.train(desiredout[j],pattern[j],0.2f,0.1f);

}

error/=PATTERN_COUNT;

//display error

cout << "ERROR:" << error << "\r";

}

//once trained test all patterns

for(i=0;i<PATTERN_COUNT;i++)

{

net.propagate(pattern[i]);

//display result

cout << "TESTED PATTERN " << i << " DESIRED OUTPUT: " << *desiredout[i] << " NET RESULT: "<< net.getOutput().neurons[0]->output << endl;

}

return 0;

}

Download the source as a ZIP file here. Please note that this code is for educational purposes only and may not be used for commercial purposes.

UPDATE: The source code is also available on GitHub: https://github.com/danielrioss/bpnet_wpage
