By Andrew Ziegelstein

The development of artificial neural networks is altering the progression of human thought. Artificial neural networks (ANNs) are models of information processing that resemble biological neural networks. While ANNs give individuals more efficient ways of processing data, adverse results occur if machines interfere with human cognition. The development of artificial neural networks remains a fascinating element of scientific discovery, but this innovation brings about revolutionary changes that both benefit and harm the development of individuals’ intelligence.

Neural networks consist of cells known as neurons that transmit electrical impulses throughout the nervous system. An individual neuron consists of dendrites, a soma, an axon, and a myelin sheath. Dendrites receive signals from other neurons. The soma is the cell body, which houses the neuron’s nucleus. The axon carries electrical impulses away from the soma toward other cells, with the myelin sheath acting as an insulator. Certain neurons perform specific tasks, such as transmitting signals from sensory organs to the brain or from the brain to the muscles (Wood & Wood & Jones, 2006). Multiple neurons transmitting data for a specific purpose form a neural network. Modern scientists continue to improve ANN models that imitate the behavior of biological neurons, enabling inventors to create machines that perform humanlike tasks.

According to psychologist George A. Miller, cognitive science began on September 11, 1956, at the Massachusetts Institute of Technology (MIT). The Symposium on Information Theory officially documented discussions about artificial intelligence. Students at Dartmouth College began to develop programs to solve problems, recognize patterns, play games, and reason (Gardner, 1987). Scientists apply artificial neural networks in speech processing, image analysis, and robotics. Others use ANNs as mathematical models of biological neural networks. Regardless of purpose, the creation of artificial neural networks remains complex.

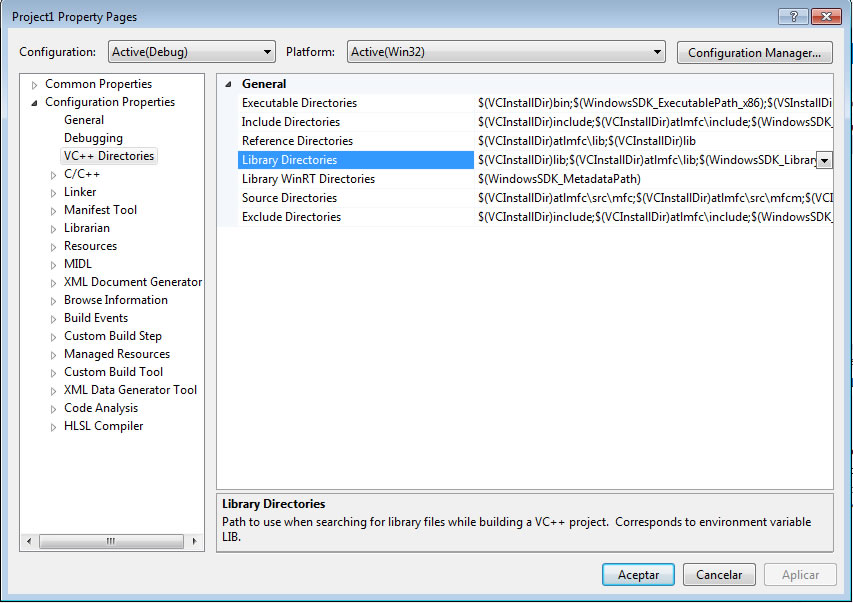

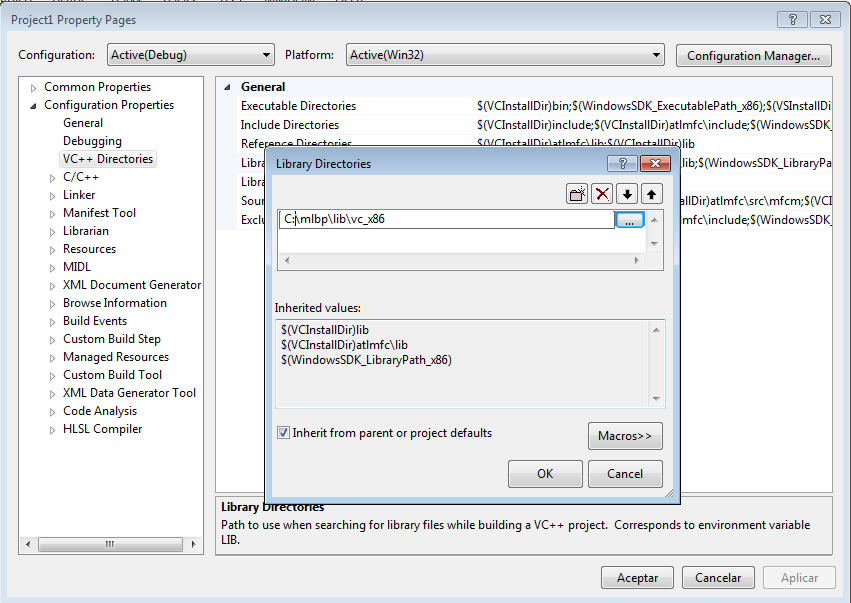

Scientists define artificial neural networks mathematically with models similar to Figure 1. First, the inputs ($X_0$ through $X_p$) combine with the synapses of the neuron, which are represented as weights. The weighted inputs travel through the neuron and eventually combine at a “summing junction,” ∑. Electronic impulses relay this sum to the “activation function,” which sends out the desired output. The equation in Figure 1b expresses a biological neuron’s processes as a mathematical function (Rios, 2007-2008). In a biological network, the “summing junction” represents the spinal cord, while the “activation function” serves as the brain. The output $Y_k$ represents the reaction the brain forces a person to perform.
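The neuron model described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the essay does not say which activation function Figure 1 uses, so the logistic sigmoid is assumed here, and the function names and the input and weight values are hypothetical.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic activation function (an assumed choice, not taken from Figure 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def neuron_output(inputs, weights, activation=sigmoid):
    """Combine weighted inputs at the summing junction, then apply the activation."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    # Summing junction: each input is scaled by its synaptic weight and added up.
    net = sum(x * w for x, w in zip(inputs, weights))
    # Activation function: turns the sum into the neuron's output.
    return activation(net)


# Hypothetical inputs X_0..X_2 and weights, chosen only for illustration.
y_k = neuron_output([1.0, 0.5, -1.0], [0.2, 0.4, 0.1])
print(y_k)
```

Passing the activation as a parameter keeps the summing junction separate from the choice of activation, which mirrors the two stages named in the text.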

While scientists know what artificial neural networks consist of, researchers disagree on whether there exists a “central planner” that collects information from any location in the system. Andy Clark states that in the human body, the brain acts as a “central planner,” and experiments demonstrate the organ’s ability to comprehend multiple sources of data. Clark notes that in certain ANNs, independent devices operate in separate locations, each with an individual pathway to convert sensory inputs into actions. However, Clark believes that for an ANN to act like a human, there must be a centralized network similar to the brain. Humans connect sensory input together when hearing, touching, and seeing at the same time. Clark also disagrees with the theory that transmitting information to a centralized area like the brain adds significant computation time. Through methods such as ballistic reaching, preset trajectories, and motor emulation, Clark believes that brains log repetitious actions, decreasing the time it takes for the brain to recognize what is occurring. When the brain identifies what it must do, the signal transmits to the output device. By employing Clark’s theories, researchers limit the time it takes for computers to correct errors, enhance the overall speed of the system, and enable the neural network to produce more consistent output (Clark, 1997).

Discoveries in neuroscience lead to intriguing inventions in artificial intelligence and provide humans with computational power unrivaled in the past. As an example, computers prove theories proposed by mathematicians hundreds of years ago. Approximating the sum of an infinite series of numbers once had to be worked out with a writing utensil and paper. Only individuals blessed with minds like Newton’s or Einstein’s contemplated how to solve these problems, but today, ANNs help anyone with a scientific calculator resolve an infinite series by hitting a few buttons.

Andy Clark, Director of the Cognitive Science Program at Indiana University, describes how digital technology enables humans to ignore distance as a limiting factor of production. At Duke University, researchers discovered patterns of neural signals throughout the brain of an owl monkey. Once documented, these patterns were entered into a computer that predicted the future movements encoded by the neural networks. Signals from the monkey’s brain passed through the computer and controlled a robotic arm that received the signal 600 miles away at the MIT Touch Lab. Dr. Mandayam Srinivasan, director of the MIT laboratory, noted that the experiment provided the monkey’s brain with an arm 600 miles away (Clark, 2003). With this type of technology, organizations like NASA possess the ability to control probes in other areas of the solar system, and human knowledge bases extend beyond their physical area.

Andy Clark views a cell phone as another link that allows a person to theoretically be in two places at once (2003). Hundreds of years ago, the actions of a human in one area would not affect a situation far away. Today, an individual can eat lunch, run a business in America, and deal with foreign import companies at the same time. While these artificial networks enhance the ability to focus on multiple projects, they divide the person’s attention among separate places. This leads us to the detriments of artificial neural networks, specifically the fact that they separate humans from actual experience.

Technological advancement revolutionizes the lives of people across the world, but only time will tell whether discovery enables humans to advance. Our fear of Y2K did not stir enough controversy to scare people away from reliance on electronics. If all computer systems crash, the world economy will collapse. However, the greatest controversy regarding the development of artificial neural networks in particular involves whether the progress limits the comprehension levels of human beings (Rieder, 2008).

For an artificial neural network to function, it seems that some sort of sensory mechanism must be built into the machine. According to German philosopher Immanuel Kant, “any phenomenon consists of sensations, which are caused by particular objects themselves.” These sensations help the mind create schemas, mental representations of objects or places (Gardner, 1987). Through computer imaging and digital technology, humans study realms of knowledge on scales larger and smaller than what the typical individual understands. Without technological aids, the concept of space would not exist. However, when humans rely on technology to study, they lose the direct connection to their project, creating only an abstract assumption of what truly goes on. In the experiment linking the monkey’s brain to the robotic arm, electronic impulses transferred from the monkey’s brain to the robot. This connection replaces human touch, eliminating that aspect of the human experience. Growing accustomed to a strictly digital education results in a human’s inability to learn through varied methods, including a classroom environment.

The eventual goal of any project is growth, and students seek to expand their knowledge base by attending college. However, modern universities struggle to educate learners in lecture formats. Professor Michael Wesch of Kansas State University created a research video with the assistance of two hundred students in his anthropology course. The video documented the survey results of 133 students at the institution, and the results seem to show that college students resist reading and believe that coursework has little to no relevance to their lives. Students claim that, on average, they write 42 pages in papers each semester but type 500 e-mails. Further support for the idea that electronics and digital technology hold humans back comes from students stating that they will view approximately 1,281 Facebook profiles in a semester and read only 8 books in a year. The data seems to coincide with a theory presented over 1,500 years ago (Wesch, 2007).

According to Professor Wesch:

there is no question that the 200-seat lecture hall is, and always has been, an inferior model on which to base a system of teaching and learning… It is a rejection of the dialectic approach of the Academy, dominant until 529 AD when it was finally closed by the Emperor Justinian… It is an authoritarian continuation of the ‘Scholastic’ tradition founded by the early Church ‘schoolmen’ and continuing through the middle ages. It is a 9th century, not a 19th century environment… The problem is imposing a 16th–19th century epistemology on an ill-prepared digital-age mind (2007).

The results of the survey coincide with this theory, leaving no question as to why Kansas State University students appear to lack interest in scholarly endeavors in classes with an average of over 100 students (Wesch, 2007).

To further explain why college students struggle, researchers must consider developmental differences across generations. Generations within the last 100 years grew up turning to the radio and library for entertainment. Verbal and written communication served as the normal trigger to stimulate attention. Currently, infants are exposed to digital media from television and the computer. The idea of a biological neural network now comes into play, as flashing lights and sounds of television shows like “Dora the Explorer” signal the brain to pay attention. Wesch states that “[the] powerfully presentational structure of visual images, movement, light, color, and non-verbal sounds and music makes sustained propositional thought difficult for the students of the digital age” (2007). After a child is encouraged to pay attention to the unique bells and whistles of “The Wiggles,” text will not trigger brain stimulation. Biological neural networks in modern-age students rely on intricate visual and audio triggers to stimulate attention, whereas students from past generations relied on basic verbal and written communication. Perhaps prolonged exposure to digital-age technology creates a new attention deficit disorder toward teachers’ words and blackboards. An Australian survey found that people who watch less than 1 hour of television per day do better on memory tests than those who watch more. “Couch potatoes,” individuals who watch television for hours on end, increase their risk of developing Alzheimer’s disease as the brain literally zones out (Tesh, 2008). While these facts present themselves repeatedly, artificial neural networks and digital media continue to impede the development of biological neural systems.

As Professor Wesch implies, the same theories proposed prior to the year 500 AD apply to researching the quality of college institutions (2007). Some believe that universities with a low faculty-to-student ratio have below-average educational programs. The personal attention given by scholars like Aristotle, Socrates, and Plato remains an important aspect of education. Justinian disowned the methods taught by the Academy because it was more important to industrialize education than to create systems that enhanced enlightenment. Military societies did not need smart soldiers, so they rushed students through school and onto the battlefield. Modern college education likewise represents commercial investment when hundreds of students cram into lecture halls where they have limited opportunities to learn. Ironically, our sophisticated digital systems make education more difficult for masses of people.

If Thomas Kuhn studied the change in educational success, he would note the paradigm shift in the functioning of human neural networks. Artificial neural networks transmit data in ways not previously experienced by humans, and while modern machines process information faster than ever before, the new technologies limit the abilities of biological neural networks. Even so, artificial neural networks play a critical role in the development of humans, creating biological neural pathways that cannot comprehend education through classroom reading, listening, and writing. Only the future will tell whether ANNs lead to human success or failure.

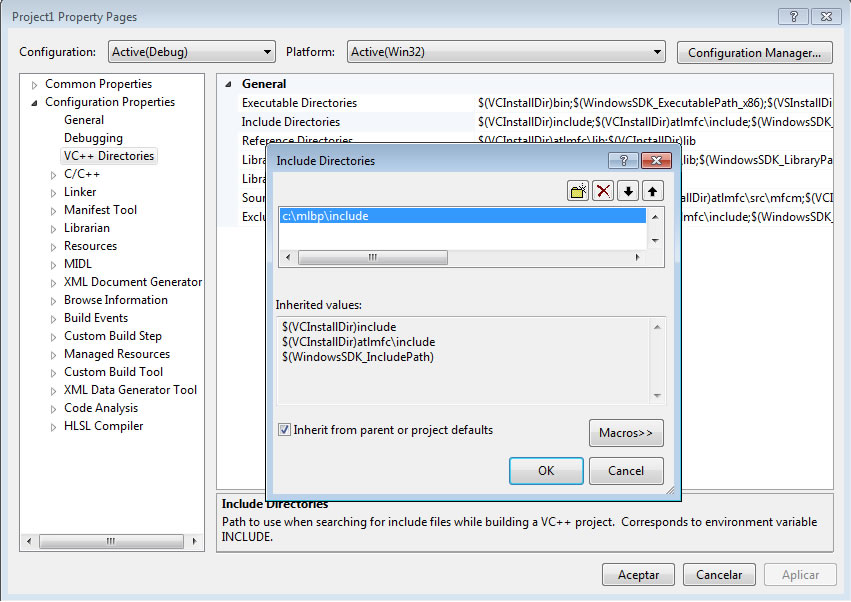

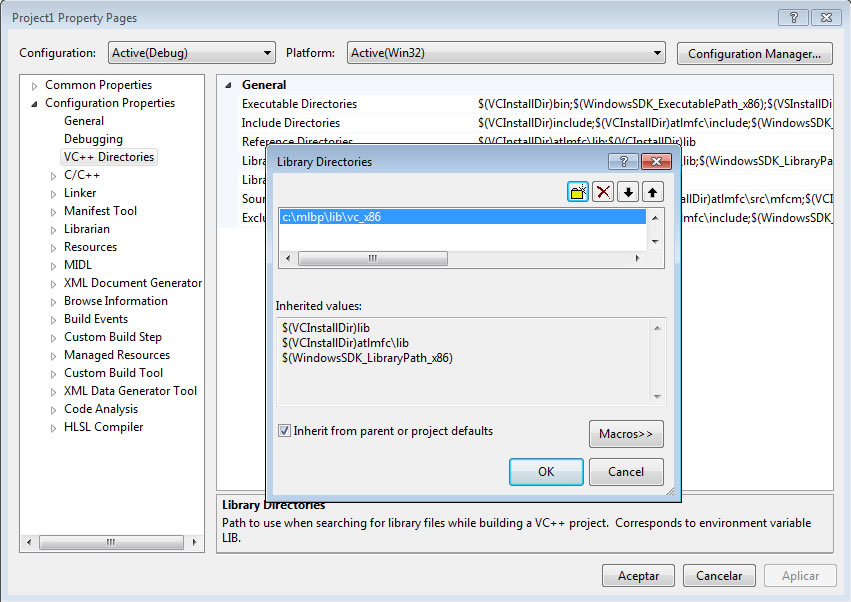

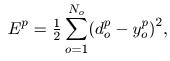

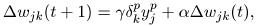

-bg-FFFFFF-fg-2B2B2B-s-1.jpg) where net is the output value of each neuron of the network and the f(x) is the activation function. For this implementation, I'll be using the sigmoid function

where net is the output value of each neuron of the network and the f(x) is the activation function. For this implementation, I'll be using the sigmoid function -1/(1-e-{-x})-bg-FFFFFF-fg-2B2B2B-s-1.jpg) as the activation function. Please notice the training algorithm I am showing in this article is designed for this activation function.

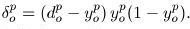

as the activation function. Please notice the training algorithm I am showing in this article is designed for this activation function. where

where  are the desired and current output respectively

are the desired and current output respectively .

. where

where  is the learning rate constant

is the learning rate constant  the layer error and

the layer error and  the layer input value.

the layer input value.  is the learning momentum and

is the learning momentum and  is the previous delta value.

is the previous delta value.-w(t)_i -\Delta w(t-1)_i -bg-FFFFFF-fg-2B2B2B-s-1.jpg)

.\sum\limits_{i-1}-n lerror_l . w_l-bg-FFFFFF-fg-2B2B2B-s-1.jpg) where

where  and

and  are the error and weight values from the previous processed layer.

are the error and weight values from the previous processed layer.  is the output of the neuron currently processed
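As a rough illustration of these training rules, here is a minimal Python sketch. It assumes the sigmoid activation, an output error built from the desired and current outputs, a hidden error propagated back from the previously processed layer, and a weight update that uses a learning rate and a momentum term. The function names, the default constants, and the logical-OR demo data are hypothetical choices for this example, not taken from the original implementation.

```python
import math


def sigmoid(x):
    """Sigmoid activation: 1 / (1 + e^-x)."""
    return 1.0 / (1.0 + math.exp(-x))


def output_error(desired, current):
    """Output-neuron error; current * (1 - current) is the sigmoid derivative."""
    return current * (1.0 - current) * (desired - current)


def hidden_error(output, layer_errors, layer_weights):
    """Hidden-neuron error from the errors and weights of the layer processed before it."""
    back = sum(e * w for e, w in zip(layer_errors, layer_weights))
    return output * (1.0 - output) * back


def update_weights(weights, prev_deltas, error, inputs, rate=0.5, momentum=0.5):
    """Apply delta_i = rate * error * input_i + momentum * previous delta_i."""
    deltas = [rate * error * x + momentum * d for x, d in zip(inputs, prev_deltas)]
    new_weights = [w + d for w, d in zip(weights, deltas)]
    return new_weights, deltas


# Demo (assumed data): one sigmoid neuron learning logical OR.
# The first input is a constant 1.0 that plays the role of a bias.
samples = [([1.0, 0.0, 0.0], 0.0), ([1.0, 0.0, 1.0], 1.0),
           ([1.0, 1.0, 0.0], 1.0), ([1.0, 1.0, 1.0], 1.0)]
weights = [0.0, 0.0, 0.0]
deltas = [0.0, 0.0, 0.0]
for _ in range(2000):
    for inputs, desired in samples:
        current = sigmoid(sum(x * w for x, w in zip(inputs, weights)))
        err = output_error(desired, current)
        weights, deltas = update_weights(weights, deltas, err, inputs)

# Round each trained output to the nearest class label.
predictions = [round(sigmoid(sum(x * w for x, w in zip(i, weights))))
               for i, _ in samples]
print(predictions)
```

The demo only exercises the output-neuron rule; `hidden_error` would be called once per hidden neuron when a network has more than one layer.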

If you think learning all about neural networks is really easy, I would tell you that you really need to give it some time and have patience to understand their complexity.