In this short document I’ll show you how to quickly set up the backpropagation library for your application.

Visual Studio Users

First, you need the library. If you don’t have it yet, you can get it here.

- Start a new project in the Visual Studio IDE.

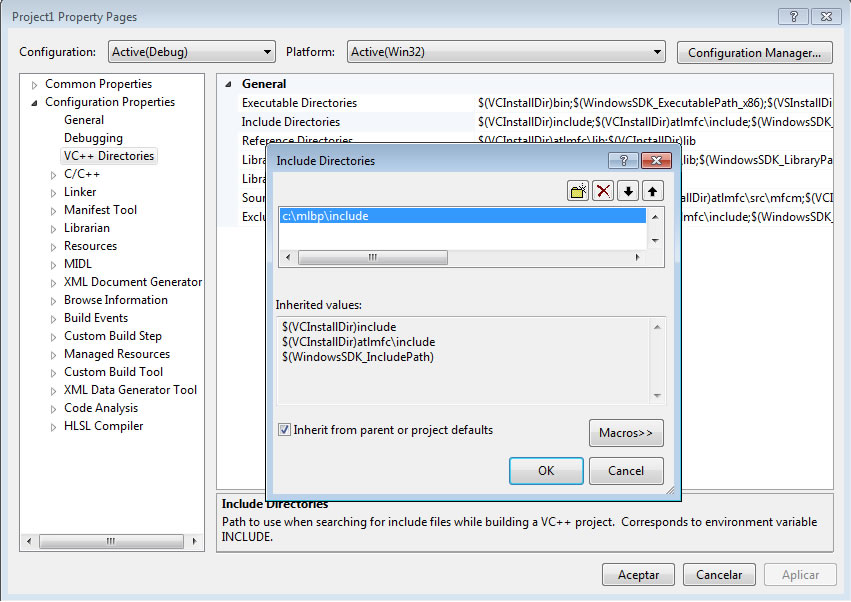

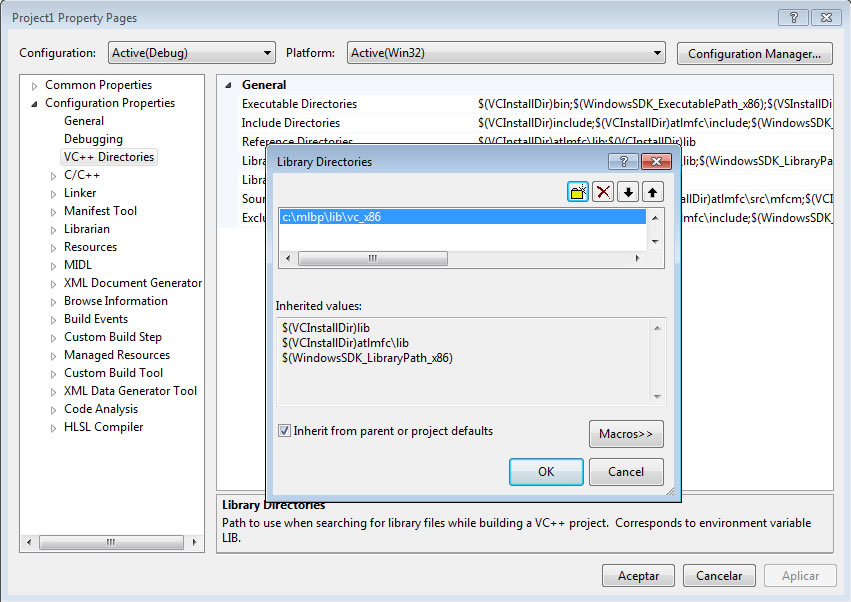

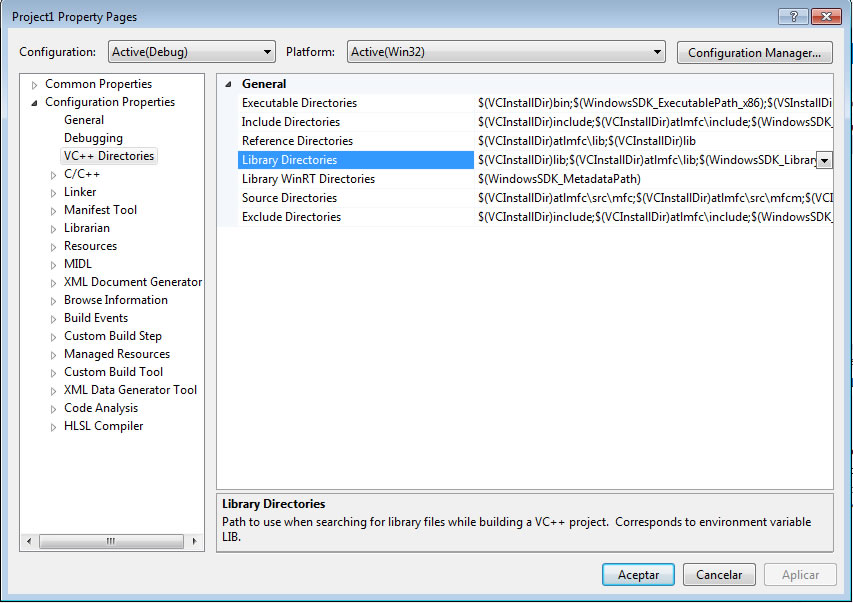

- If the MLBP library is in a different folder than your project, add the folder that contains the header (.h) files to your project configuration (Project Properties -> VC++ Directories -> Include Directories). Make sure to add the libs folder too.

- Defining global macros: If you want to use the double-precision library, add the macro DOUBLE_PRECISION to the project’s global preprocessor definitions (Project Properties -> C/C++ -> Preprocessor -> Preprocessor Definitions). If you get linker errors, I also recommend defining the macro SHARED_LIBRARY.

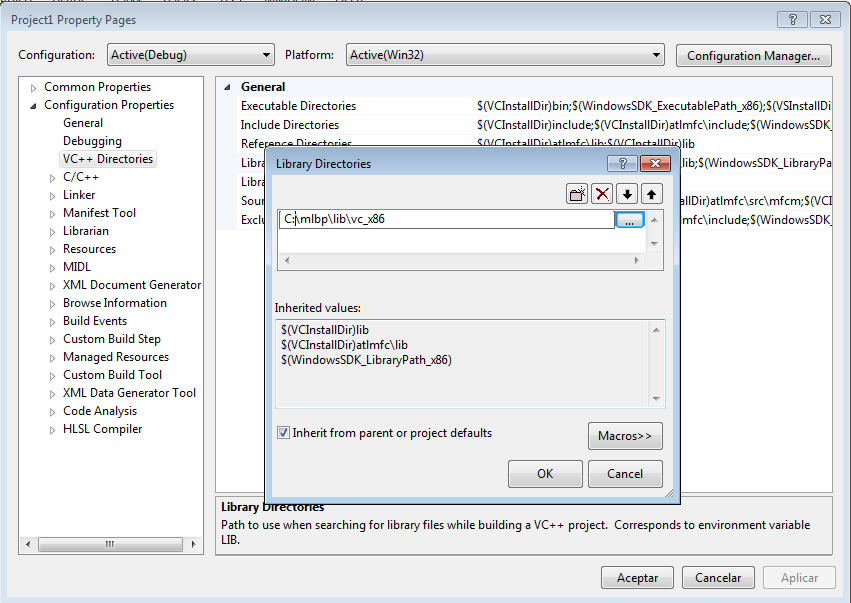

- Adding libraries to the project: So that Visual Studio can find the library to link against, open Project Properties -> VC++ Directories and add the directory where the .lib files are stored to Library Directories.

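- In one of your source files, add the directive #pragma comment(lib, "mlbp_stsfp.lib"), or #pragma comment(lib, "mlbp_stdfp.lib") if you enabled DOUBLE_PRECISION. This is a compiler directive (not a macro) that tells the Visual Studio linker which library to link.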

Qt Creator Users

- Start a new project in Qt Creator.

- In the .pro file, add the folder that contains the header files to INCLUDEPATH. Example: INCLUDEPATH += c:/mlbp/include

- Add the library to link against with LIBS. Example: LIBS += c:/mlbp/libs/vc_x86/mlbp_stsfp.lib

- Defining global macros: If you want to use the double-precision library, add DEFINES += DOUBLE_PRECISION (and link mlbp_stdfp.lib instead of mlbp_stsfp.lib in LIBS). If you get linker errors, also add DEFINES += SHARED_LIBRARY.

Now you are ready to start coding your project.

Initializing Neural Network

1. First, bring the namespace that groups the library, mlbp, into scope:

```cpp
using namespace std;   // cout and endl in these examples come from <iostream>
using namespace mlbp;
```

2. Declare a bp object, which represents the neural network:

```cpp
bp net;
```

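3. Initialize the network for your problem with bp::create(). PATTERN_SIZE, OUTPUT_SIZE and INPUTNEURON_COUNT are constants you define:

```cpp
if (!net.create(PATTERN_SIZE, OUTPUT_SIZE, INPUTNEURON_COUNT))
{
    cout << "Could not create network";
    return 1;  // exit with a non-zero status on failure
}
```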

4. Initialize all neuron weights to random values with bp::setStartValues():

```cpp
net.setStartValues();
```

5. If you have the multithreaded version of the library and want to use it, enable it by calling bp::setMultiThreaded(true).

Network ready for training

The network is now ready for training. Use bp::train() (or one of its variants) to train it. bp::train() is called inside a loop, and every training pattern must be passed to it on each pass through that loop. The idea is to keep looping until the average error returned by bp::train() is close to zero.

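You can do it this way:

```cpp
double error = 1.0;  // start above the threshold so the loop body runs at least once
while (error > 0.001f)
{
    error = 0;
    for (int i = 0; i < patterncount; i++)
    {
        error += net.train(desiredOutput[i], input[i], 0.09f, 0.1f);
    }
    error /= patterncount;  // average error over all patterns
    cout << "ERROR:" << error << endl;
}
```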

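Or you can simply run a fixed number of iterations:

```cpp
double error = 0;
for (int epoch = 0; epoch < 45000; epoch++)  // separate counter from the pattern index
{
    error = 0;
    for (int i = 0; i < patterncount; i++)
    {
        error += net.train(desiredOutput[i], input[i], 0.09f, 0.1f);
    }
    error /= patterncount;  // average error over all patterns
    cout << "ERROR:" << error << endl;
}
```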

I recommend the second approach: with the first one, if for any reason the network never reaches the target error, the program hangs in an infinite loop.

After Training

Training is computationally intensive with large patterns, and even more so when there are many of them, so you will probably want to save the trained network. There are two ways to do it.

Using Save Function

The easiest way is to use bp::save():

```cpp
if (!net.save("network.net", USERID1, USERID2))
{
    if (net.getError() == BP_E_FILEWRITE_ERROR)
    {
        cout << "Could not open file for writing";
    }
    else if (net.getError() == BP_E_EMPTY)
    {
        cout << "Network is empty";
    }
}
```

Getting a linear buffer of the network

The second way is also easy, and useful if you want to save the network in your own file format. Call bp::getRawData() and store the result in a bpBuffer, then use bpBuffer::get() to access the data as an unsigned char array and bpBuffer::size() for its length. You can then write the buffer to a file in any format you like.

```cpp
// fopen, fwrite and fclose need <cstdio>
bpBuffer buff;
buff = net.getRawData();
if (!buff.isEmpty())
{
    FILE *f = fopen("yourfile.dat", "wb");  // open for binary writing
    if (f != NULL)
    {
        fwrite(buff.get(), sizeof(char), buff.size(), f);
        fclose(f);
    }
}
```

To restore a network from a buffer, load the unsigned char array into a bpBuffer with bpBuffer::set() and pass it to bp::setRawData():

```cpp
bpBuffer buff;
unsigned char *ucbuffer;
unsigned int usize;

// LOAD ucbuffer here
// .....

buff.set(ucbuffer, usize);
if (!net.setRawData(buff))
{
    if (net.getError() == BP_E_CORRUPTED_DATA)
    {
        cout << "Invalid buffer";
    }
    //...
}
```

Using for Production

If your application won’t train the network every time the end user runs it, or trains it only once, replace the random-initialization and training steps with loading the trained network from a file, and call bp::run() whenever your application needs the neural network.